I have had a number of business conversations recently where the moral concepts of good and evil have been important to the outcome of the conversation. These conversations were about ostensibly morality-neutral topics like programming language selection, the use of patents, and the best business strategies.

What is interesting is that there was broad agreement in these conversations that certain behaviors were "good," others were "evil," and still others were neutral, even though we never explicitly defined what made those actions good or evil.

Personally, I believe in Good and Evil in an absolute sense. But not everyone shares my moral and ethical code. So why did we agree? It seems too convenient that my particular moral absolutes would also end up being universally accepted.

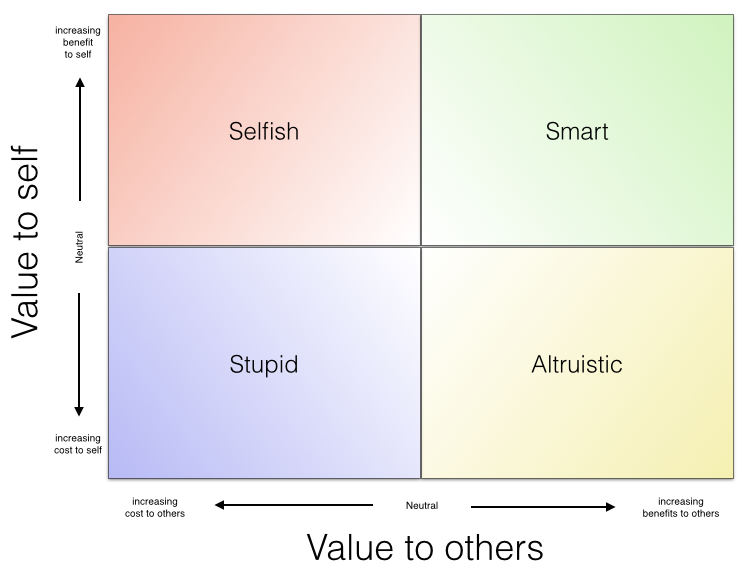

In pondering these concepts, I came up with a model that ties the idea of "Good" and "Evil" to the idea of externalities. Broadly speaking, "good" behavior creates value for others through positive externalities, and "evil" behavior imposes costs on others through negative externalities. But what is interesting, and one thing that distinguishes it from a pure utilitarian model, is the way in which it intersects with self-interest as well.

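As illustrated in the figure above, "good" behavior is on the right and "evil" behavior is on the left, while self-beneficial behavior is up and self-injuring behavior is down.[1] That gives four quadrants: "smart" (upper right), "selfish" (upper left), "altruistic" (lower right), and "stupid" (lower left).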

This model maps very easily to traditional notions of right and wrong behavior. Stealing, for example, imposes a cost on someone else in order to enrich yourself: that is pretty clearly in the "selfish" quadrant. Drunk driving is "stupid": it has a high risk of imposing costs on both others and yourself.

On the positive side, someone who is self-sacrificing in order to help others (Mother Teresa being the archetypal example) is clearly "Altruistic." Finally, things like writing thank-you notes and being punctual are "smart": they produce benefits for you and for those around you.
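The four quadrants can be expressed as a tiny classifier. This is only an illustrative sketch: it assumes an action can be scored with two signed numbers, its net effect on the actor and its net externality on others, and the names `Quadrant` and `classify` as well as every numeric score below are hypothetical, not part of the model as described. It also treats a score of exactly zero on either axis as "neutral," a boundary choice the model itself does not specify.

```python
from enum import Enum


class Quadrant(Enum):
    SMART = "smart"            # benefits self and others
    SELFISH = "selfish"        # benefits self at others' expense
    ALTRUISTIC = "altruistic"  # benefits others at own expense
    STUPID = "stupid"          # harms self and others
    NEUTRAL = "neutral"        # assumption: zero on either axis sits on a boundary


def classify(effect_on_self: float, effect_on_others: float) -> Quadrant:
    """Place an action on the 2x2 grid.

    effect_on_self: net value to the actor (positive = self-beneficial, "up").
    effect_on_others: net externality on others (positive = "good", right side).
    """
    if effect_on_self == 0 or effect_on_others == 0:
        return Quadrant.NEUTRAL
    if effect_on_others > 0:
        # Right-hand ("good") side of the grid.
        return Quadrant.SMART if effect_on_self > 0 else Quadrant.ALTRUISTIC
    # Left-hand ("evil") side of the grid.
    return Quadrant.SELFISH if effect_on_self > 0 else Quadrant.STUPID


if __name__ == "__main__":
    # Hypothetical, made-up scores for the examples in the text.
    examples = {
        "stealing": (5, -5),
        "drunk driving": (-10, -10),
        "self-sacrifice for others": (-3, 8),
        "writing a thank-you note": (1, 2),
    }
    for name, (self_effect, others_effect) in examples.items():
        print(f"{name}: {classify(self_effect, others_effect).value}")
```

Run as a script, this prints "selfish," "stupid," "altruistic," and "smart" for the four examples, matching the quadrants assigned in the text. The hard part in practice is, of course, agreeing on the scores, not the classification.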

What is nice about the model, though, is the way in which it applies to a number of situations that don't easily lend themselves to traditional descriptions of moral behavior. Patent licensing and litigation is one example.

The ethics of patent licensing and litigation

I have long considered patent trolling to be "evil," but this model helps me explain why. Patent trolling is "selfish": trolls use the structural inequalities in the patent litigation system to extract money from others (a negative externality) for their own benefit. In the most common scenario, a patent troll identifies companies that are (allegedly) already using the patented technology, so there is no compensating transfer of value to the defendant.

This is in contrast to patent licensing that takes place before the commercialization of the patented technology. If someone recognizes the value of a patent, and licenses it with an eye to exploiting the technology in the marketplace, that is an interaction in which both parties gain something that they value - a "smart" behavior.

This is also in contrast to situations in which someone copies an innovation from someone else. The copying of someone else's work is "selfish" behavior, and litigation is justified because it forces the person doing the copying to internalize (and thus ethically neutralize) the previous taking.

Clearly, not every action is only harmful or only helpful. For example, I had a talk with a lawyer who specialized in bringing patent troll cases. He argued that what he is doing is good: his lawsuits are "allowed under the law" (and thus not a taking), and in pursuing the lawsuits, he creates jobs for people in his firm and provides for his family (both of which are positive in this model).

I disagree. In this model, it doesn't matter that an action is "allowed under the law" - what matters is the effect that the action has on others. I also think that benefits to one group don't necessarily translate into something being "ethical" under this model. I am not sure whether I internally use a utilitarian "maximize the sum of the utility for all participants" model, or whether I think that being selfish for an in-group (your firm, your family) is just an extension of being selfish for yourself. Either way, I see patent trolling as wrong.

A more nuanced argument for patent litigation has to do with global incentives. If patents are easier to enforce, then they are worth more, creating a greater incentive to create. More innovation benefits everyone, thus making the short-term costs to patent defendants part of a globally utility-maximizing increase in innovation.

I agree with this argument in theory. If we had a perfect patent prosecution system, in which every granted patent was novel, nonobvious, and well-enabled, then I would agree that there should be very few limits on the enforcement of patents. In the system we have, though, invalid and non-enabled patents get through the patent office. It therefore makes sense to restrain patent litigation and to support the systems that allow patents to be challenged (such as Covered Business Method review and Inter Partes Review).

The ethics of software development

This model also has a more nuanced application to software development. Software bugs create costs, which are negative externalities. We don't know who will bear those costs, but we can reasonably predict that they will occur. Given the existence of bugs, what are our professional duties as software developers?

@Glyph gave a talk at PyCon 2015 called "The Ethical Consequences Of Our Collective Activities" in which he argues that we need ethical principles to guide our work as software developers. Software programs perform actions on behalf of their users; thus, actions that do not reflect the wishes of the user are unethical. This includes both intended divergences from user interests (like spying on user activities) and unintended consequences (like security failures).

Examining Glyph's idea in the light of this ethical model, intended divergences clearly fall on the left-hand ("selfish"/"stupid") side of the model; the only difference is whether or not the software developer benefits from the divergence.

A more interesting question is whether there is an ethical duty to minimize and mitigate "bugs" - i.e., unintended divergence from the user's wishes. I believe that the answer is a qualified yes.

Clearly, there cannot be an absolute duty of correctness in software. We are all human. Mistakes happen. But where mistakes can cause harm to others, it is reasonable to impose a duty of competence on those who practice a profession.

The closest analogy I can think of comes from the American Bar Association's Model Rules of Professional Conduct. The very first rule has to do with a lawyer's duty of competence:

Rule 1.1 Competence. A lawyer shall provide competent representation to a client. Competent representation requires the legal knowledge, skill, thoroughness and preparation reasonably necessary for the representation.

The commentary on rule 1.1 specifies that this rule requires that lawyers evaluate the matters before them and make a judgment as to whether they have sufficient skill to respond to client needs. Different matters require different levels of expertise. If the lawyer cannot reasonably handle the matter, they need to include someone else with the requisite expertise.

The commentary also addresses the need for adequate preparation - with "adequate" being scaled to the importance of the matter - as well as the need for lawyers to maintain competence as the profession and the law advance. This specifically requires that lawyers keep up with relevant technology advances. Comment 8 says:

“[t]o maintain the requisite knowledge and skill, a lawyer should keep abreast of changes in the law and its practice, *including the benefits and risks associated with relevant technology*, engage in continuing study and education, and comply with all continuing legal education requirements to which the lawyer is subject” (emphasis added).

Although there is no widely accepted code of ethics for software developers, there is a parallel between a lawyer's duty of competence and the ethical duties of a developer. The developer of an application has a duty to reasonably avoid doing harm to others, and the magnitude of that duty scales with the scope of the possible harm. Wasting a user's time through inefficient or buggy code is a smaller potential harm than exposing a user's bank information through incorrect use of security protocols.

If you accept this framework for ethical behavior, and you agree that it applies to activities like software development, then it has a number of provocative implications. To some extent, there may be an ethical duty to comment your code, to write tests, and to use source control. There is also a duty to know your limits; if you can't be sure that you got the encryption right, bring in someone who can do a code review.

Other applications

There are a number of places where this model makes sense and leads to interesting results:

- Activist shareholders. Activist shareholders are clearly self-interested; they are in it for the money. But are they "smart" or "selfish"? You can't say without looking at the specific situation. An activist shareholder who pushes for company reform and greater efficiency may be doing good and thus be "smart." On the other hand, a corporate raider arbitraging the difference between the value of a company as a whole and the value of its parts is more likely to be harming others and thus be "selfish."

- Servant leadership. Does servant leadership require that someone act against their own self-interest (i.e., be "Altruistic")? I don't think so. While some aspects of servant leadership may require altruistic acts, the focus of "leadership" in general is on accomplishing a goal, and many leaders are rewarded for their progress toward those goals. Even if individual acts are self-sacrificing, servant leadership in general is likely to benefit the leader as well (thus making it "Smart"). One measure of a good leader may be how often the leader finds win-win ("Smart") solutions.

- Game theory. This ethical model applies directly to game theory. In general, interactions that can be modeled as positive-sum games are "good," zero-sum games are ethically neutral, and negative-sum games are "evil."

- Capitalism and profit-taking. Building on the game theory result above, trade (and capitalism generally) is ethically positive because of the existence of gains from trade. Even in an ideal transaction, however, how "ethical" it is depends on how the gains are split between the parties. Tim O'Reilly's maxim to "Create more value than you capture" suggests an ethically positive interaction, whereas a transaction in which the vendor captures all the gains is at best ethically neutral. (See also "The Value of Switching Costs".) I would also argue, though, that repeated positive interactions accrue benefits in other ways, such as goodwill, that may be more valuable over time than the money from a single transaction.

This model is incomplete. People will disagree about whether something is harmful or helpful at all, and about which timeframes should be considered. For example, a doctor giving a shot is doing a short-term harm (the pain from the shot) in return for a long-term gain (improved health). But in the words of George Box, "all models are wrong, but some are useful." This one helps me evaluate a number of different situations and decide how I can act in ways that benefit the world.

<span style="font-size:smaller;">[1] Hugh Smith points out in an email that this mapping was anticipated by Carlo Cipolla's "Basic Laws of Human Stupidity," specifically his third law. I'm sure there are others too. I'm a little more generous than Cipolla, though: what he terms "helpless" I term "altruistic." But the concepts are similar, and his entire essay is worth reading.</span>